My last post was written primarily as background for this one. TL;DR: as software engineers, we're doing a lot right nowadays that we were screwing up badly 20 to 30 years ago.

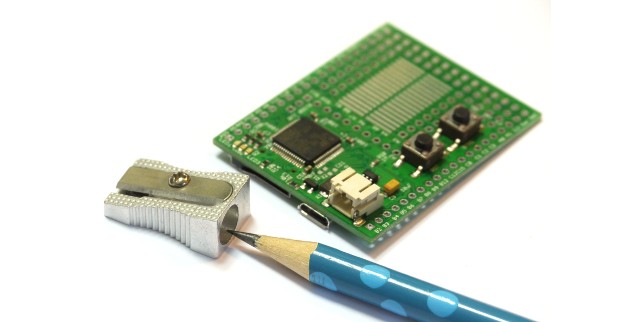

I am moved to write this post because I've been playing with the Espruino board (having pre-ordered one during the Kickstarter campaign), which runs JavaScript close to the metal on an ARM processor.

JavaScript is a cool language because it draws upon a lot of the experience that engineers have gained over decades. Concurrent programming is hard, so JavaScript keeps its concurrency model simple and robust: code runs on a single-threaded event loop, every function runs undisturbed to completion, and communication between separate contexts is done by shared-nothing message passing. In the browser, JavaScript gracefully handles external events with unpredictable timing. That skill set is exactly what is required for embedded hardware control.
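To make the run-to-completion idea concrete, here is a minimal sketch using nothing but the standard `setTimeout`, which should behave the same way in a browser, in Node.js, and (as far as I know) on the Espruino. The names `log`, `sum`, and `snapshot` are just mine for illustration. A timer with zero delay is scheduled first, yet its callback cannot interrupt the loop that follows; it only runs once the current code has finished and handed control back to the event loop.

```javascript
// Events recorded in the order they actually happen.
const log = [];

// Schedule a callback with zero delay. It is queued, not run immediately.
setTimeout(() => {
  log.push("timer callback");
  console.log(log.join(" -> ")); // prints "start -> loop done -> timer callback"
}, 0);

log.push("start");

// Synchronous work: nothing can interrupt this loop,
// not even the timer that has already expired.
let sum = 0;
for (let i = 0; i < 1000; i++) {
  sum += i;
}
log.push("loop done");

// Snapshot taken before control returns to the event loop:
// the timer callback has not had a chance to run yet.
const snapshot = log.slice();
```

On a microcontroller the "timer" would more likely be a pin changing state or a byte arriving on a serial port, but the principle is the same: a handler never sees half-finished work from another handler, so there are no locks to get wrong.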

Crockford once described JavaScript as "Lisp in C's clothing". JavaScript has many of the features that distinguish Lisp and its derivatives, features that set Lisp apart as probably the most forward-looking language ever designed.

This video shows Gordon Williams, the creator of the Espruino board, speaking at a Node.js conference in the UK. One of the great things he did was to write his code so that it can run on several different boards. I'm particularly interested in installing it on the STM32F4 Discovery board because it costs very little and looks pretty powerful as ARM microcontrollers go. There is a YouTube playlist that includes these videos and others relating to the Espruino board.
